A couple of months ago I got a couple of wonderful birthday presents. My lovely geeky girlfriend got me two Western Digital 500 GB SATA 3.0 drives, which were promptly supplemented with a 3ware 9550XS 4-port hardware RAID card. Immediately I came up with the idea for this article. I had just read up on mdadm software RAID (updated reference) so I though it would be perfect to bench mark the hardware RAID against the software RAID using all kinds of file systems, block sizes, chunk sizes, LVM settings, etcetera.

Or so I though… As it turns out, my (then) limited understanding of RAID and some trouble with my 3ware RAID cards meant that I had to scale back my benchmark quite a bit. I only have two disks so I was going to test RAID 1. Chunk size is not a factor when using RAID 1 so that axis was dropped from my benchmark. Then I found out that LVM (and the size of the extends it uses) are also not a factor, so I dropped another axis. And to top it off I discovered some nasty problems with 3ware 9550 RAID cards under Linux that quickly made me give up on hardware RAID. See The trouble with 3ware RAID below. I still ended up testing various filesystems using different blocksizes and workloads on an mdadm RAID 1 setup, so the results should still prove interesting.

Hardware used

I ran all of the benchmarks on my home server: A HP ProLiant ML370 G3. Here are the specs:

- Dual Intel Xeon 3.2 Ghz with hyperthreading, 512 KiB L2 cache and 1 MiB L3 cache each (giving me four CPUs)

- 1 GiB RAM

- Adaptec AIC7XXX SCSI RAID with four 36.4 GiB disks in RAID 5 and two 18.2 GiB disks in RAID 1

- 3ware 9550SXU 4-port SATA 3.0 RAID with two 500 GiB disks in JBOD, running in mdadm software RAID 1

- Debian GNU/Linux 4.0 (Etch)

Yes, it's probably a bit overkill for a home server :-) When doing write speed benchmark, the files were read from the RAID5 unit which can read at about 150 MiB/s, much faster than the 3ware mdadm RAID 1 is able to write. That means that the RAID5 read speed should not have affected my benchmarks.

The trouble with 3ware RAID

The hardware RAID card has been giving me a fair bit of trouble. I bought it because I needed a SATA card that was compatible with PCI-X. Most of the "cheap" software RAID cards out there only support regular PCI, which is too slow. As soon as you start using three or four drives you hit the limit of the PCI bus. My server supports 64bit PCI-X at 100 Mhz which is more than fast enough. Sadly I couldn't find a simple SATA card with PCI-X support so I bought the expensive but high quality 3ware 9550SXU instead.

My problem with the 3ware card was that I couldn't make it go faster than 5 MiB write speed using its hardware RAID 1 features. As it turned out after contacting their help desk, the cards don't go any faster unless you enable the write cache. I had not enabled that feature because 3ware recommends not using it unless you have a battery backup unit to protect the cache in case of a power failure. When I did enable it, the speed was roughly equal to mdadm software RAID, but you run the risk of loosing data some on a power failure. In short, the cards are useless unless you buy an extra BBU for the cards. Strangely enough if you put the disks in JBOD mode, it does work at full speed. Even stranger, in JBOD mode the disks are roughly 4 MiB per second faster at writing with the write cache disabled (85 MiB/s as opposed to 81 MiB/s with write cache enabled in JBOD mode).

I ran a few tests and there was little difference in speed between using JBOD+mdadm or hardware RAID 1 with write cache enabled. I chose to trust Linux more than the hardware and went with mdadm. I read a nice little trick which makes mdadm very useful. When a disk fails in hardware RAID you need to replace it with an equal sized disk or larger. This can be a problem since not all 500 GiB disks have the exact same block count. It varies. If the new disk is smaller you need to shrink and rebuild the RAID. With mdadm you can create a partition that does not quite fill up the disk. When one disk fails, you can put in another disk, create an equal sized partition and be up and running soon. And variation in block count is absorbed by the unused disk space.

The benchmark setup

At first I tried benchmarking my new RAID unit using Bonnie++ and IOzone to determine what the best setup would be for me. I had some problems with both these programs. Bonnie++ only tests the hard drive, not the filesystem. It showed me write speeds of over 80 MiB/sec but these don't come near real-world performance. IOzone is able to test the filesystem as well, but I found it far too complicated to interpret the results. I never found a decent explanation of the "record size" attribute that IOzone tests, and since the results varied widely dependent on that record size, I could not interpret the results correctly. This is not so much a problem with Iozone as it is with my lack of understanding the results. Iozone is an expert tool and I am no expert. It produces vast amounts of data but I don't know how to map that to my typical workload.

Update: The Iozone team sent me an email explaining the record size attribute:

Record size is the size of the transfer that is being testing. It is the value of the third parameter to the read() and write() system calls.

So, instead of using a standard benchmarking suite I wrote my own benchmark based on real work that is performed by my server. My server mainly does three things:

- It receives and processes my backups, which are mostly made up of many small files (95% of it are source code files).

- It hold my music collection. Occasionally it receives a large batch of new ogg files (whenever I have time to sit down and start ripping my CD collection).

- Occasionally I store some images on it (as in disk-images). These are multi-gigabyte files.

I decided to do four tests:

- Reading a number of files, by outputting their contents to /dev/null.

- Writing to the filesystem, by copying files from my primary RAID unit to the filesystem. The average read speed of my primary RAID is much faster than 90 MiB/sec which is the theoretical maximum of my software RAID according to Bonnie++, so there should be no interference.

- Reading and writing at the same time, by copying files from the filesystem to a different location on the same filesystem.

- Deleting the files

I ran all these tests for three collections of files: A collection of 10928 small source code files (maximum size a few KiB each), a collection of 1744 Ogg Vorbis files (around 4 MiB each) and a collection of 3 multi-gigabyte files. Each test was run three times and the results were averaged. In between each test I ran the `sync` and `sleep` commands to make sure that caching would not interfere with the test results. All tests were performed on a 200 GiB mdadm software RAID 1 with the following filesystems:

- XFS with 1 KiB blocksize

- XFS with 4 KiB blocksize

- Ext3 with 1 KiB blocksize

- Ext3 with 4 KiB blocksize

- ReiserFS with 1 KiB blocksize

- ReiserFS with 4 KiB blocksize

- JFS with 4 KiB blocksize

It was not possible to create a JFS filesystem with 1 KiB blocksize, so it's missing from the test.

Results of the benchmark

Below are the results for all the filesystems, split out per test and per file collection.

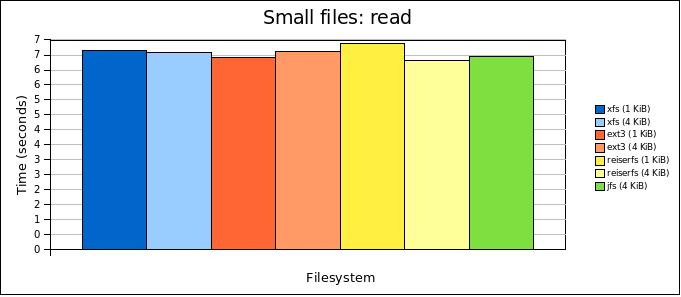

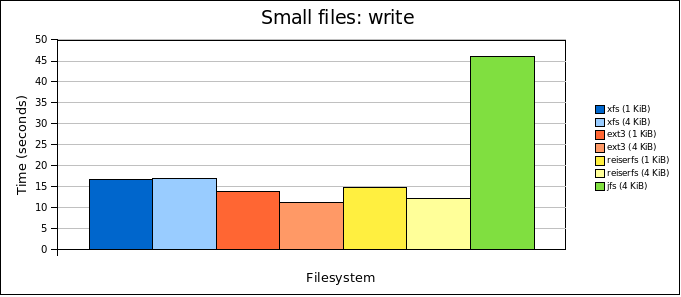

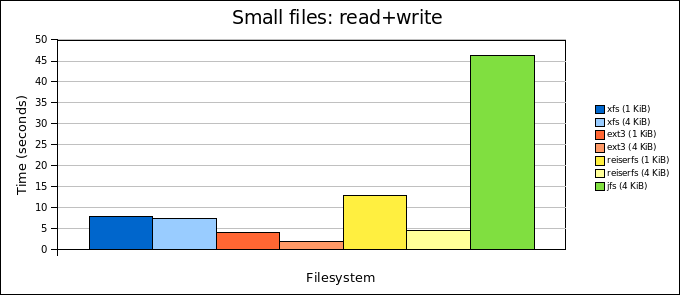

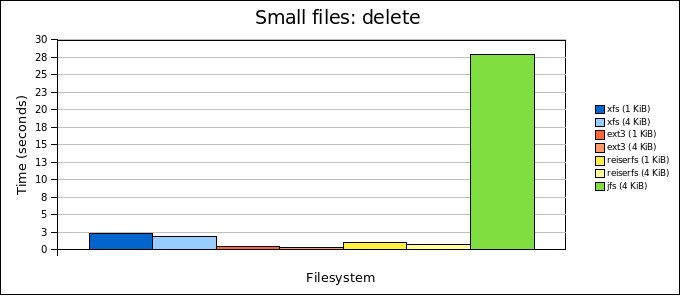

Benchmarking small files

The benchmark using 10928 small source code files, maximum a few KiB each

Reading speed is about the same across the board. You can see immediately how slow JFS is writing and deleting all these files. What really strikes me is how slow ReiserFS is. ReiserFS is always touted as well-suited for large amounts of small files, but in my benchmark ReiserFS is outperformed by Ext3. As expected, XFS is a bit slower than ReiserFS and Ext3. XFS is usually touted as well-suited for large files.

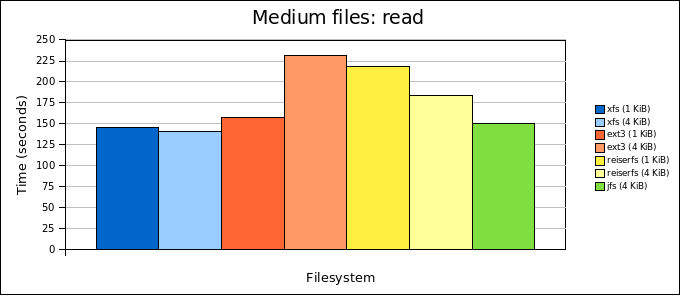

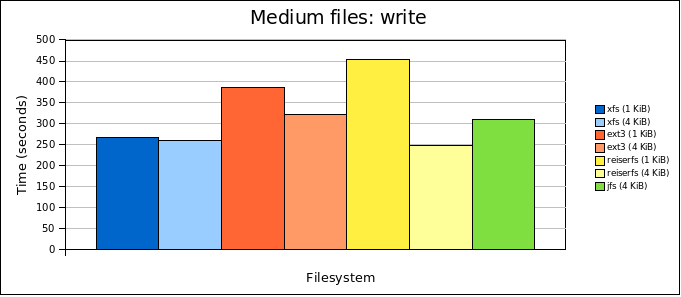

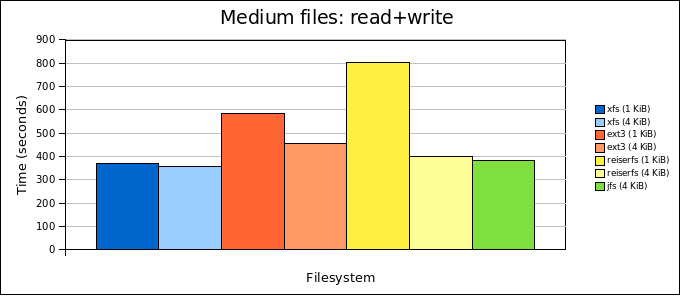

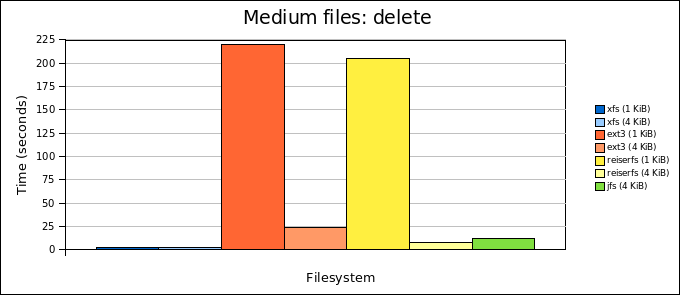

Benchmarking medium-sized files

The benchmark using 1744 Ogg Vorbis files, on average about 4 MiB each

From this benchmark you can clearly see that XFS is better suited to larger files. It outperforms all other filesystems on all tests. There are two things that strike me here. First off, Ext3 with a 4 KiB blocksize is much slower reading than Ext3 with a 1 KiB blocksize. You would expect it the other way around. Second, both Ext3 and ReiserFS are very slow to delete these files when they have a 1 KiB blocksize, but their speed is on-par with the rest under a 4 KiB blocksize. I expected some difference, but not this much.

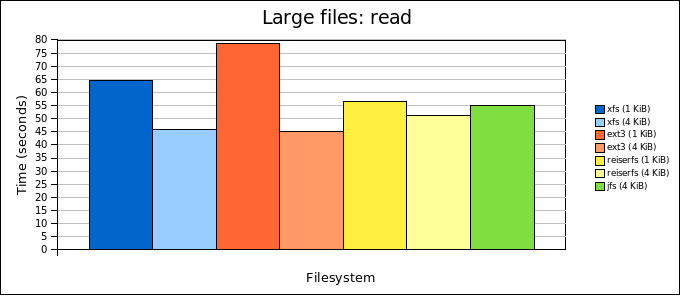

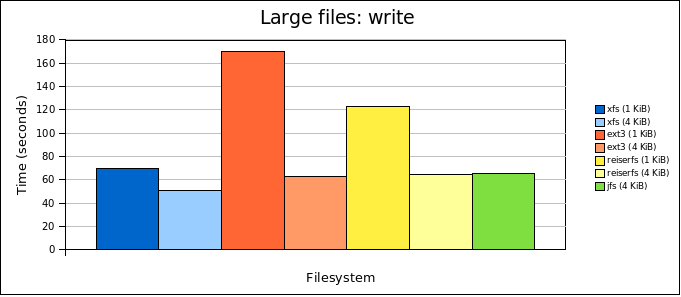

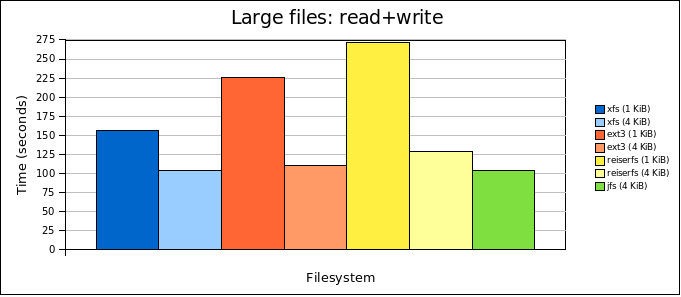

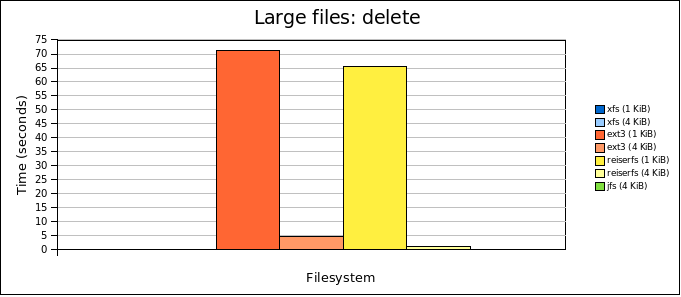

Benchmarking large files

The benchmark using 3 multi-gigabyte files

In this test we see again the abysmal poor performance of Ext3 and ReiserFS on a 1 KiB blocksize when deleting files. The rest of this test isn't much of a surprise. 4 KiB blocksizes are faster than 1 KiB blocksizes and at 4 KiB, the filesystems perform about equally. It seems that the advantage of XFS in comparison to Ext3 is lost again with really large files.

Conclusion

I'm not going to make any general conclusions from my benchmark. My tests are pretty non-standard and so is my hardware setup. I chose to use XFS with a 4 KiB blocksize after these tests. It's clearly faster when dealing with my Ogg Vorbis collection and not much slower when handling my backups (small files). These are the two tasks that I do most often on my server.

Future work

I'm probably going to get comments from some of you why I didn't test Reiser4, Ext4 or some other filesystem. I didn't test these because they are not quickly to set up under Debian Etch. They require work, compiling kernel modules, etcetera. I'd like to test these if they are easily available under Debian Lenny after it is released but I will need to buy some extra hard drives if I am going to do that. There is more data on my software RAID than will fit on my hardware RAID so I can't shuffle the data around to test again with these disks.

One thing I would like to do in the future (when I have more disks) is to re-run these benchmarks on a RAID 5 array and vary the chunk size. As the Linux Software RAID HOWTO says, the combination of chunk size and block size matters for your performance. For a RAID 1 array this doesn't matter since there is no chunk size to deal with. Then I could compare those tests with RAID 1+0 and RAID 0+1. But all that is for another time.

I hope these benchmarks were in some way useful for you!

Comments

#1 Anonymous Coward

2. You need to note the mount options. Some filesystems like ext3 do ordered journaling, whereas XFS does simply meta data journaling. On most contemporary implementations of Reiserfs, ordered journaling is the default... but it does matter... so need to list that stuff.

Welcome the wondrous world of benchmarks.

#2 Sander Marechal (http://www.jejik.com)

Okay. Then could you perhaps explain why the numbers for bonnie++ were much higher than the numbers I found? Bonnie++ gave me about 85 MiB/s while my own tests peaked out at around 64 MiB/s.

I have used the default mount options on Debian GNU/Linux 4.0 (Etch).

#3 Anonymous Coward

#4 Eric

http://sidux.com/PNphpBB2-viewtopic-t-5275.html

All real world tests, and not graphed... maybe you would like to graph them for content for you?? :) I never really had a chance to make it really public, or care to have a blog, so you can publish my results as a user follow if you like. I would also quite enjoy seeing my results graphed and published for some use other than my own testing. To note, the logbufs=8 mount option is a major increase, aswell as using a 128 MB internal/external xfs log when creating the filesystem.

Enjoy !!

#5 Anonymous Coward

It is easy to do so via

#echo deadline > /sys/block/sda/queue/scheduler

#6 Sam Morris (http://robots.org.uk/)

#7 Figvam (http://figvam.blogspot.com)

You can include output of "/bin/mount" for the tested partitions to list the mount options for those not having Debian Etch installed.

#8 Anonymous Coward

It would be awesome if you tested against btrfs as well !!

http://en.wikipedia.org/wiki/Btrfs

#9 Anonymous Coward

#10 Sander Marechal (http://www.jejik.com)

But, at some points these drives fill fill up and I will get new ones. Then I'll re-run all the tests with all of your recommendations included and also so some RAID5 test for comparison. I hope by that time Ext4 and Reiser4 will also be in Debian so I can test those as well.

Figvam: Here are the default mount options in Debian (as given by /bin/mount):

/dev/mapper/3ware-test on /mnt type xfs (rw)

/dev/mapper/3ware-test on /mnt type ext3 (rw)

/dev/mapper/3ware-test on /mnt type reiserfs (rw)

/dev/mapper/3ware-test on /mnt type jfs (rw)

Anonymous Coward #9: Yes, I have used those when I attempted to solve the 5 MiB/sec issue. I've changed the nr_requests and blockdev settings and changed the scheduler to deadline. None of them made any significant improvements. Enabling write-cache did bring normal performance but you run the risk of data loss on power failure. Afterwards I reset the values.

Eric: Your benchmark seems to correlate my own. The main difference between our tests is that your test doesn't show the same bad JFS performance on small files as I see in my test.

As for writing up your tests: I don't have the time to work it all out now. I'm leaving for a long vacation in Greece in a few days. But if you write (and graph) up an article I'd be happy to host it here as a guest blog post. Alternatively you're also welcome to submit it to LXer Linux News. Disclaimer: I'm an editor for LXer. We're always on the lookout for fresh articles there :-)

#11 Anonymous Coward

#12 Sander Marechal (http://www.jejik.com)

I live in a tiny 35 square meter apartment at the moment so it's all the room I could spare. When I buy a proper house I'll get a proper server room with a proper A/C and a proper UPS. Doing all that now is just a waste of money.

#13 James K. Lowden

#14 Anonymous Expert

#15 Sander Marechal (http://www.jejik.com)

#16 Mark Lord

I use Linux under MythTV for my PVR box here, and mistakenly used ext3 as the underlying filesystem.

Myth routinely records programs into 30-50GB files -- now those are large!

And ext3 takes 1-3 minutes to delete one of them, whereas XFS apparently can do the same job in 1-3 seconds.

Next time, I'm using XFS for these huge files.

Cheers

#17 Eric

So whenever you get back, feel free to use it as your content lol, I really don't mind or anything, I'd encourage it if anything since it just helps spread the word on how awesome XFS is for most users and general majority of use cases.

Later, and I'll bookmark this to finally find it easier, is it phased out from my RSS reader, I forgot to mark it as Important...

#18 Anonymous Coward

echo 3 > /proc/sys/vm/drop_caches

#19 Anonymous Coward (http://dnet.com.ua)

I`m also tested my not very good controler ADAPTEC 1430SA and hard drives 2xWD7500AAKS (750 Gb 7200. 16 mb) with ext2,ext3,xfs filesystems on C2D 6420@2.13GHz +i965P

my results (copy 1GB file from sda1 to sdb1):

ext2 to ext3 ~ 60-65 mb/s

ext3 to ext3 ~ 70 mb/s

ext3 to xfs ~ 75 mb/s

xfs to ext3 ~ 75 mb/s

xfs to xfs ~ 80-85 mb/s

#20 Sander Marechal (http://www.jejik.com)

#21 Anonymous Coward (http://dnet.com.ua)

Sorry for my English )))

I`m use Gentoo and not use HARDWARE RAID, because drivers with this controller not open - only .rmp 8(. I patch sata_mv driver from Marvel (chip Marvel 7042 installed in this ADAPTEC).

Both sda1 and sdb1 use with this card. It have PCI-X interface (4x) and both sda1 and sdb1..

when have little free time i`m make some tests whis software raid 8)

#22 Duncan

Unless turned off with the "notail" mount option, reiserfs does "tail-packing", comparable to the XFS inode storage of small files (which I know little about, see below, so mention much below). Many filesystems, including ext2/3, use a whole number of blocks, the smallest number of blocks the file will fit within, to store the file. Thus, a 1-byte file will still take a whole block (in the test, 1k or 4k) when written to disk.

Obviously very few files are going to be a precise block size, so with most filesystems there's always going to be some waste. With particularly small files (<1/2 the block size) the amount of wasted slack-space at the end of the allocated blocks can exceed the actual data size. In general, the smaller the file and the larger the block size, the higher the wasted percentage is.

The reiserfs tail-packing feature eliminates this problem by packing multiple file "tails" (the portion of a file smaller than a single block, so the whole file where it's less than a block in size) into a single block, thus wasting very little space. (A few bytes of a tail block do tend to be wasted due to imprecisely matching tail and block sizes. As mentioned above, from what I've read, XFS has a different solution with a similar effect.)

A smaller block size is the usual workaround for this problem on non-tail-packing filesystems, ameliorating but not eliminating it. As a non-packing filesystem, it's thus relatively common to format ext2/3 filesystems with small block sizes, say the 1k you used in your examples as opposed to 4k.

With tail-packing, however, the usual major benefit of smaller blocks is lost on reiserfs, which therefore optimized for the default 4k block size. That (in addition to the simple fact of the extra overhead) is why 1k reiserfs performed so badly -- there was very little reason to optimize for that case and Namesys in fact didn't optimize for it.

I'd guess that this also explains the difference in performance you noted, which after all isn't that much. Reiserfs is in its default tail-packing mode and as such, is optimizing actual space used by these files, as opposed to access time only. Had you mounted the reiserfs volumes with the notail option, I believe you'd have seen somewhat faster results. You would have also noted the difference in actual space used, had you run a df before and after writing the files, comparing ext3 with reiserfs both with and without tail-packing enabled.

As mentioned twice above, XFS has a similar solution. However, back when I first considered it, it was infamous for zeroing out recently written data after power outages and the like. It had been designed for enterprise grade systems, and enterprise grade systems usually have UPS and power redundancy arrangements such that this wasn't a big issue for them. Thus, on my non-UPS home system, I decided XFS wasn't a good idea and ultimately went with Reiserfs.

However, in a comment on the LWN article pointing here, DGC (XFS/kernel dev ?) says they introduced a temporary fix for that back in 2.6.17, and finally fixed it properly with 2.6.22, so the problem shouldn't be an issue any longer. That was new (but welcome) information to me! =8^) With future reiserfs maintenance (beyond the basics) now in question, the various distributions and etc that supported it now planning on moving elsewhere, and reiser4 really looking like it may never hit mainline, and a personal issue with unnecessarily wasting all that space even if today's disks ARE so big, I had been wondering where I might eventually end up, so this is welcome news indeed. =8^)

[ I don't see any preview... Hopefully that LWN link posts correctly. My first posting attempt timed out. Hopefully hitting post comment again doesn't get this posted twice... ]

#23 MLS

As far as iozone and record size, from my understanding it's how the application packages it's writes (I/O), for example, Sybase is notorious for it's 2-4k I/O size. A large file can be sent as a ton of 4k I/Os or a smaller number of 256k I/Os. Of course understanding how your application will be affected by this means understanding how it writes.

#24 Anonymous

So I would like to suggest merging small files which may improve performance significantly!

#25 Nat (http://makarevitch.org/rant/raid/)

Comparing 3ware integrated functions to the 'md' Linux driver: http://makarevitch.org/rant/raid/

#26 OpenSuSE (http://sapfeer.ru)

#27 Anonymous Coward

This is of course blatantly wrong - 3ware (which I am not affiliated with) is apparently concerned about the safety of your data, which is why it recommends having the BBU in the first place.

So, the facts: with 3ware writecache enabled, you cna lose data in nasty ways without BBU

with the BBU, your data is perfetcly safe for short (48 hour) power outages, at maximum speed.

With software raid, you cna lose data in nasty ways - there is no BBU option available either.

Disabling write caching in the drives as recommended for software raid will reduce performance considerably, just as with 3ware, except your data is still not as safe, as it doesn't do write journaling.

3ware disables the drive write cache in any case, so is safe even at power outages when the controller write cache is enabled, if you use a moderm filesystem using barriers.

software raid is either very slow with the same setup (much slower than 3ware), or simply can't keep the data safe for power outages.

So the choice is between fast and reasonably safe with 3ware (or perfectly safe with bbu), and fast and always unsafe with linux software raid.

Or in other words, you brag about your problems with 3ware as opposed to software raid, when in fact the only difference is that the defaults with 3ware are safe (but you can make it unsafe), while ALL linux softwareraid modes are UNSAFE.

Thats hardly a problem with 3ware...

#28 Anonymous Coward

Comments have been retired for this article.