Lone Wolves

Web, game, and open source development

Displacement based rendering

By Joost van Velzen

Originally posted September 17, 2002

Introduction

Most 3D engines work with polygons. The principles described in this article show that it is possible to build a high-detail 3D engine without them. My purpose is to convince you that polygon-free 3D engines are possible and might even be better. Polygons have limitations: complex shapes require large numbers of them, and they do not lend themselves to deformation. One thing I will show is that rippling water, which is quite complex to do with polygons, can be created easily with techniques that rely on displacement maps for shape. I am convinced that these techniques and principles are a good alternative to standard polygon techniques. Since I am not that good at explaining things, you will probably have to read the whole article twice.

Basic architecture

The displacement based engine uses its own type of objects to describe 3D objects. These objects can contain other objects (which can contain other objects, and so on). A complex shape is constructed out of several smaller objects. For example, a 'house' object could be constructed from 'wall' objects that each contain a lot of 'brick' objects. Each object has a descriptor that says what kind of object it is.

There are 4 basic types of objects:

- Positioners: these tell where to put an object in the 3D world, including rotations etc.

- Clusterers: objects that are built from other objects (they include data about the relative positions between objects, as well as rotations etc.)

- 3dData: objects that describe colors, shape etc. with 'faces'.

- Faces: these contain color and shape data.
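The four object types can be sketched as a small set of data structures. This is only an illustration under assumptions: the class names, the fields, the use of Euler angles for rotation, and the reuse of `Positioner` for the relative placements inside a `Clusterer` are all hypothetical and not taken from an actual implementation.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple, Union

Vec3 = Tuple[float, float, float]


@dataclass
class Face:
    """Colour and shape data for one side of a bounding box (layers omitted)."""
    name: str


@dataclass
class Data3d:
    """A bounding box with up to six faces; an unused face is None."""
    faces: List[Optional[Face]]


@dataclass
class Clusterer:
    """An object built from other objects, each with a relative placement."""
    descriptor: str
    children: List["Positioner"] = field(default_factory=list)


@dataclass
class Positioner:
    """Places an object in the world (or, in this sketch, inside a Clusterer)."""
    target: Union[Data3d, Clusterer]
    position: Vec3 = (0.0, 0.0, 0.0)
    rotation: Vec3 = (0.0, 0.0, 0.0)  # Euler angles: an assumption


def count_placed_data3d(node) -> int:
    """Count how many 3dData boxes end up placed below a node."""
    if isinstance(node, Data3d):
        return 1
    if isinstance(node, Positioner):
        return count_placed_data3d(node.target)
    return sum(count_placed_data3d(child) for child in node.children)


# One brick shape is shared by every placement in the wall.
brick = Data3d(faces=[Face(n) for n in ("top", "bottom", "left", "right", "front", "back")])
wall = Clusterer("wall", [Positioner(brick, (float(i), 0.0, 0.0)) for i in range(3)])
house = Clusterer("house", [Positioner(wall), Positioner(wall, (0.0, 0.0, 4.0))])
placed_bricks = count_placed_data3d(house)
```

Because a shape such as the brick is stored once and only placed many times, a large scene does not have to duplicate face data.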

I think you can imagine what Clusterers and Positioners do, so I am going to skip that part and go on with the interesting stuff. The 3dData objects and the faces describe the shape and color of a 3D object (Clusterers are used to create more complex shapes from a collection of 3dData objects). It basically works like this:

Imagine a decorated ball. Put the ball in a glass box. The glass box has six faces.

(Figure: ball in a glass box)

If you took a picture of each side of the box, you would have gathered enough data to reconstruct the decorations on a new white ball of the same size (it would be difficult, but possible). Those pictures, however, are not enough if we want to recreate the entire ball. Let's say we made perfect pictures and saved them as bitmaps.

(Figure: color bitmap of a face)

We can now make an additional bitmap that indicates whether each pixel is part of the ball or not.

After we have done this, we can create a depth map that tells us, for each pixel, how far the ball is from the side of the glass box.

(Figure: face displacement map)

This is almost enough information, but if the object is looked at closely we need some special tricks to boost the detail. So we make 3 additional maps (x, y, z) that store, for each pixel, a vector directed from the center of the ball through the surface. Now imagine that the decorations were made with a special paint that reflects less than the plastic of the ball. For such cases we need an additional map describing how the material reflects light (from very diffuse to perfect mirror). Note that the 3 vector maps (x, y, z) are needed to calculate reflections correctly. It gets even more complex: the ball was made of a mixture of 2 types of plastic, one transparent and one purple, so we also need a transparency map. The glass box corresponds to a 3dData object and its faces to the 'face' objects.
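To make the ball example concrete, here is a minimal sketch that computes the "part of the ball or not" bitmap, the depth map and the three vector maps for one face of the box. It assumes a sphere centred in a tightly fitting box and looks at the face at z = +radius; the function name `sphere_face_layers` and the pixel-centre sampling are choices made for this illustration only.

```python
import math


def sphere_face_layers(size: int, radius: float = 1.0):
    """Return (mask, depth, direction) maps for the z = +radius face of a box
    that tightly encloses a sphere centred at the origin."""
    mask, depth, direction = [], [], []
    for row in range(size):
        mask_row, depth_row, dir_row = [], [], []
        for col in range(size):
            # Map the pixel centre into the range [-radius, radius].
            x = ((col + 0.5) / size * 2.0 - 1.0) * radius
            y = ((row + 0.5) / size * 2.0 - 1.0) * radius
            rest = radius * radius - x * x - y * y
            if rest < 0.0:
                # This pixel looks past the ball.
                mask_row.append(False)
                depth_row.append(None)
                dir_row.append(None)
            else:
                z = math.sqrt(rest)
                mask_row.append(True)
                # Distance from the face of the box to the surface of the ball.
                depth_row.append(radius - z)
                # Unit vector from the centre of the ball through the surface.
                dir_row.append((x / radius, y / radius, z / radius))
        mask.append(mask_row)
        depth.append(depth_row)
        direction.append(dir_row)
    return mask, depth, direction


mask, depth, direction = sphere_face_layers(5)
```

In the middle of the face the ball touches the box, so the depth there is zero; towards the edge of the ball the depth grows, and in the corners the mask marks the pixels as empty.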

3dData objects

A 3dData object represents the bounding box of a 3D object. The box typically has 6 faces, but a face that doesn't contain interesting data is useless and can be left out (NULL). A single 3dData object can describe very complex shapes, but it cannot describe hollow shapes in great detail; for those you need a clustered set of 3dData objects. With clustered sets of 3dData objects you can describe any shape you like.

A few examples:

- (almost) flat ground: you won't look at it from below or from the sides, so your 3dData object only requires one face.

- a mug: you will require 1 3dData object for the exterior of the mug and 4 3dData objects (one face each) for the hollow inside of the mug. You could of course divide the mug into 4 pieces so that you would require only 4 3dData objects, but you would require a lot more faces, so it isn't a smart move.

- a sphere: this fits nicely inside a box, so one 3dData object with 6 faces will do.

- a wire fence: the wires don't have much detail on the sides, so one 3dData object with 2 faces will do.

Faces

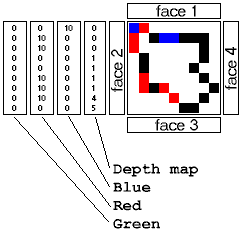

This is the good part. A face contains all the shape and colour information of a piece of a 3dData object. A face is built out of layers: 3 color layers (red, green and blue), a transparency layer, a depth map and reflection data (4 layers). This seems like a whole lot of data, but a face also has a descriptor with an on/off switch and a default value for each layer. For example, a solid white cube:

- No colour layers required, only one default value.

- No transparency layer required: 0.

- No depth map required: 0.

- No reflection data required; all reflection layers can use default values.
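The on/off switch with a default value per layer could look roughly like the sketch below. The layer names, the `Layer` class and the `make_face` helper are hypothetical; the sketch also assumes that a switched-off layer is represented by `data=None`, that white is stored as three equal colour defaults, and that the default direction vector points straight out of the face.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Layer names follow the article: 3 colour layers, transparency, depth,
# and 4 reflection layers (3 direction components plus reflectivity).
LAYER_NAMES = (
    "red", "green", "blue", "transparency", "depth",
    "dir_x", "dir_y", "dir_z", "reflectivity",
)


@dataclass
class Layer:
    default: float = 0.0
    data: Optional[List[List[float]]] = None  # None means the layer is switched off

    def sample(self, row: int, col: int) -> float:
        """Return the stored value, or the default if the layer is off."""
        return self.default if self.data is None else self.data[row][col]


def make_face(defaults: Dict[str, float]) -> Dict[str, Layer]:
    """Build a face descriptor in which every layer starts switched off."""
    return {name: Layer(default=defaults.get(name, 0.0)) for name in LAYER_NAMES}


# One face of the solid white cube: no bitmaps at all, only default values.
white_cube_face = make_face({"red": 1.0, "green": 1.0, "blue": 1.0, "dir_z": 1.0})
stored_layers = sum(1 for layer in white_cube_face.values() if layer.data is not None)
```

With this layout the white cube face stores no bitmaps at all, and a face only pays for the layers it actually switches on.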

Note that the 3dData object doesn't store its position in the 3D world, so it can always be defined in the orientation that suits its shape best.

Depth maps

This is like the heightmap that is used in terrain rendering. The depth map specifies, for each 'pixel', the distance from the face of the bounding box to the surface of the object.
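As a sketch of how a depth value turns back into a 3D point, the following assumes a unit bounding box from (0, 0, 0) to (1, 1, 1), face coordinates u and v in the range 0 to 1, and depth measured inward along the face normal. The `FACES` table and the function name are hypothetical; a real engine would pick its own conventions.

```python
# For each face of the unit box: (origin corner, u axis, v axis, inward direction).
FACES = {
    "-z": ((0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1)),
    "+z": ((0, 0, 1), (1, 0, 0), (0, 1, 0), (0, 0, -1)),
    "-x": ((0, 0, 0), (0, 1, 0), (0, 0, 1), (1, 0, 0)),
    "+x": ((1, 0, 0), (0, 1, 0), (0, 0, 1), (-1, 0, 0)),
    "-y": ((0, 0, 0), (1, 0, 0), (0, 0, 1), (0, 1, 0)),
    "+y": ((0, 1, 0), (1, 0, 0), (0, 0, 1), (0, -1, 0)),
}


def face_pixel_to_point(face: str, u: float, v: float, depth: float):
    """Convert a face 'pixel' (u, v) and its depth into a point inside the box."""
    origin, u_axis, v_axis, inward = FACES[face]
    return tuple(
        o + u * a + v * b + depth * n
        for o, a, b, n in zip(origin, u_axis, v_axis, inward)
    )


point = face_pixel_to_point("+z", 0.5, 0.5, 0.25)
```

Here the centre pixel of the "+z" face with depth 0.25 ends up a quarter of the way into the box, at z = 0.75.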

Rendering

Rendering is always complex, so I will only explain the basic idea behind the rendering system. The first trick is that the engine does not just render a 2D view but a new face (with a depth map). This makes a lot of nasty checking obsolete. For example, if an object is inside another object, no special checking is required, because depth data is saved in the view map. The second trick is that the engine uses more than one face if required, which is useful for transparency etc. When an object is rendered, all of its faces are drawn onto the 'view-face'. The view-face is a face with perspective. This makes the calculation a bit complex, but has the advantage that anything drawn on the face is in fact visible on the screen. A lot of effects are calculated in the 'view-face', which saves a lot of time because only visible effects are calculated.
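The idea of a view-face that keeps depth data can be illustrated with a very small sketch. It assumes a camera at the origin looking along +z, a simple pinhole projection and a single opaque layer (so no transparency); the `ViewFace` class and its `focal` parameter are hypothetical names for this example.

```python
import math


class ViewFace:
    """A face with perspective that stores a colour and a depth per pixel."""

    def __init__(self, width: int, height: int, focal: float = 1.0):
        self.width, self.height, self.focal = width, height, focal
        self.depth = [[math.inf] * width for _ in range(height)]
        self.color = [[None] * width for _ in range(height)]

    def plot(self, point, color) -> bool:
        """Project a camera-space point onto the view-face.
        Returns True only if the point is visible after the depth test."""
        x, y, z = point
        if z <= 0.0:
            return False  # behind the camera
        # Perspective divide; the visible range is -1..1 on both axes.
        sx = self.focal * x / z
        sy = self.focal * y / z
        col = math.floor((sx + 1.0) * 0.5 * self.width)
        row = math.floor((sy + 1.0) * 0.5 * self.height)
        if not (0 <= col < self.width and 0 <= row < self.height):
            return False  # outside the view
        if z >= self.depth[row][col]:
            return False  # something nearer was already drawn here
        self.depth[row][col] = z
        self.color[row][col] = color
        return True


view = ViewFace(4, 4)
drew_far = view.plot((0.0, 0.0, 5.0), "far")
drew_near = view.plot((0.0, 0.0, 2.0), "near")
drew_hidden = view.plot((0.0, 0.0, 3.0), "hidden")
```

Because every pixel of the view-face remembers its depth, an object inside another object needs no special case: whichever surface is nearest simply wins.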

Real time light

I figured out a somewhat unusual solution for real time light calculations that I want to explain here. The problem with complex shapes and transparency is that you have to calculate all kinds of weird shadow effects (especially with fog). The solution is to divide the problem into smaller problems. Every object has a list of other objects that it receives light from and a list of objects that it sends light to. For example:

The sun shines through a tree onto a blanket on the ground.

The sun sends light to the tree (the tree receives light from the sun).

The tree changes the light and adds a complex shadow mask and sends it to the blanket (the blanket receives light from the tree).

If something changes (for example, a balloon moves between the sun and the tree), every object 'downstream' has to recalculate its light, in that order. It's a kind of tree system for light calculations. My idea was to use 'light-beam' objects to hold the lists and the shadow mask.
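The send and receive lists can be sketched as a small graph that answers one question: when an object changes, which objects have to recalculate, and in which order? The `LightGraph` class below is hypothetical, leaves out the shadow masks entirely, and assumes the light connections contain no cycles.

```python
class LightGraph:
    """Keeps, per object, who it sends light to and who it receives light from."""

    def __init__(self):
        self.sends_to = {}
        self.receives_from = {}

    def connect(self, sender: str, receiver: str) -> None:
        self.sends_to.setdefault(sender, []).append(receiver)
        self.receives_from.setdefault(receiver, []).append(sender)

    def downstream(self, changed: str):
        """Objects that must recalculate after `changed` changes,
        ordered so that every sender comes before its receivers."""
        order, seen = [], set()

        def visit(node):
            if node in seen:
                return
            seen.add(node)
            for receiver in self.sends_to.get(node, []):
                visit(receiver)
            order.append(node)  # added only after everything it lights

        visit(changed)
        return order[::-1]


# The example from the text, with the balloon between the sun and the tree.
lights = LightGraph()
lights.connect("sun", "balloon")
lights.connect("balloon", "tree")
lights.connect("tree", "blanket")
to_update = lights.downstream("balloon")
```

When the balloon changes, only the balloon, the tree and the blanket are recalculated; the sun itself is untouched.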

Real time deformations

Imagine a face that represents the water in a pool. The face descriptor now says that the reflection layers and the depth map are 'watereffects'. Just by saving a few input values you can use a water-effect algorithm that gives you water that ripples. At the next time index you simply recalculate the layers. More complex deformations are also possible, as long as they result in new layers or new objects. A good algorithm to use for the water would be the x-water routine, which can calculate displacement maps for water very quickly. Unreal used this algorithm to calculate its water effects on textures. Those were, however, still 2D textures. With these 3D deformable faces you can make real 3D water.
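The x-water routine itself is not spelled out here, so the sketch below swaps in the well-known two-buffer height-field ripple update as a stand-in. It may differ from x-water in its details. The function name `ripple_step` and the `damping` parameter are assumptions for this illustration; the result of each step would be written into the depth map of the water face.

```python
def ripple_step(current, previous, damping=0.97):
    """One time step of a simple two-buffer water ripple.
    `current` and `previous` are depth maps from the last two time steps;
    the border cells are kept at rest (0.0)."""
    height, width = len(current), len(current[0])
    nxt = [[0.0] * width for _ in range(height)]
    for r in range(1, height - 1):
        for c in range(1, width - 1):
            neighbours = (
                current[r - 1][c] + current[r + 1][c]
                + current[r][c - 1] + current[r][c + 1]
            )
            # A wave spreads from the neighbours, and the damping
            # slowly takes the energy out of it.
            nxt[r][c] = (neighbours / 2.0 - previous[r][c]) * damping
    return nxt


# Drop a 'pebble' in the middle of a small pool and advance one time step.
size = 5
previous = [[0.0] * size for _ in range(size)]
current = [[0.0] * size for _ in range(size)]
current[2][2] = 1.0
following = ripple_step(current, previous, damping=1.0)
```

After one step the disturbance has moved from the centre pixel to its four neighbours, which is the start of a ring spreading outwards. Calling the function again with `following` and `current` advances the water by one more time index.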

Summary

Polygon engines have restrictions. A polygon engine can be pretty fast, but only as long as the shapes do not require lots of polygons. Displacement based 3D rendering is probably slower, but it can handle far more complex shapes, reflections etc., and it makes deformations possible to some extent. Conclusion: displacement based 3D rendering has a lot of possibilities.

The end

I hope you found this article interesting. I know I haven't fully explained everything (it's more of a brief introduction), so if you have questions I am willing to answer them. I can imagine lots of good questions myself.